When disaster strikes, every second counts. At the 2025 Dynamo Hackathon in Nashville, our team tackled one of the most pressing challenges facing emergency response teams today: how to rapidly deploy life-saving infrastructure when communities need it most. The result? An AI-powered disaster response system that transforms how we prepare for and respond to emergencies.

Our team—Enrique Galicia, Aaron Coffman, Adrián Fernández, Daniel Drennen, Colin Molnar, and Pawan Bhat—came together with a shared vision: reimagine disaster response through the power of automation, intelligence, and speed. We set out to build a resilient, adaptive, and intelligent safety net capable of saving lives and minimizing disruption when disaster strikes.

The Challenge: Speed Meets Complexity

Natural disasters don’t wait for perfect planning. Whether it’s flooding, wildfires, or hurricanes, emergency responders face an impossible challenge: They need to quickly deploy temporary infrastructure—shelters, medical facilities, supply distribution centers—in unfamiliar terrain, often with limited information and under extreme time pressure.

Traditional disaster response planning is manual, time-consuming, and relies heavily on human decision-making under stress. Responders must:

- Analyze unfamiliar terrain and infrastructure

- Identify safe zones for emergency facilities

- Calculate optimal routes through potentially damaged areas

- Deploy resources without complete information

- Make life-or-death decisions in minutes, not hours

Every minute of delay can mean the difference between life and death. The AEC industry has powerful tools for site analysis, pathfinding, and optimization, but to our knowledge these capabilities have not yet been applied to real-time disaster response at scale.

Masters of Disasters is a proposed intelligent system that would automate emergency infrastructure deployment from disaster detection to facility placement. By combining real-time disaster monitoring, AI-powered site analysis, and advanced pathfinding algorithms, we envision a workflow that could analyze a disaster site and deploy emergency infrastructure in minutes instead of hours.

The conceptual system works through four integrated stages:

- Real-Time Disaster Detection and Classification: The system would connect disaster monitoring to FEMA, NOAA, and USGS databases to detect and classify disasters in real time. When an event occurs, whether flooding, an earthquake, or another emergency, the system automatically receives location coordinates, disaster type, and severity data.

- Automated Site Analysis with Forma: Using Autodesk Forma, the system pulls comprehensive real-world site data for the affected area: topography, existing infrastructure, road networks, vegetation, and accessibility. This gives responders an instant, data-rich picture of the disaster zone without manual site surveys.

- AI-Powered Site Suitability Analysis: The heart of our system is AI-driven analysis that processes topographic data to identify optimal locations for emergency facilities. The algorithm considers:

- Elevation and flood risk

- Proximity to affected populations

- Safety and accessibility

- Ground conditions for rapid construction

- Distance from hazard zones

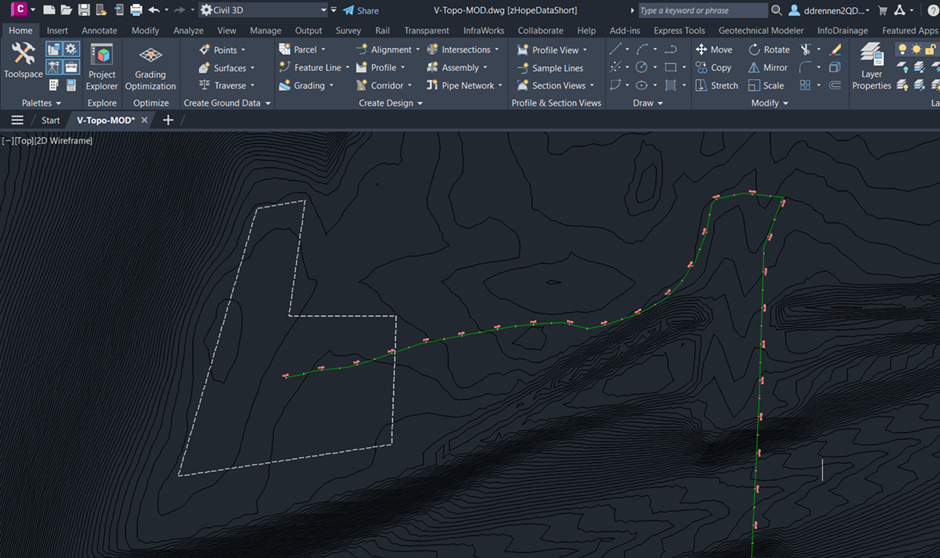

- Optimal Pathfinding with VASA: Once facility locations are determined, the system uses VASA (Voxel-based Architectural Space Analysis) within Dynamo to calculate the shortest, safest routes for emergency responders. VASA processes topography curves and meshes to find paths that account for:

- Damaged or blocked roads

- Debris fields

- Topographic barriers

- Multiple access points

- Traffic flow considerations

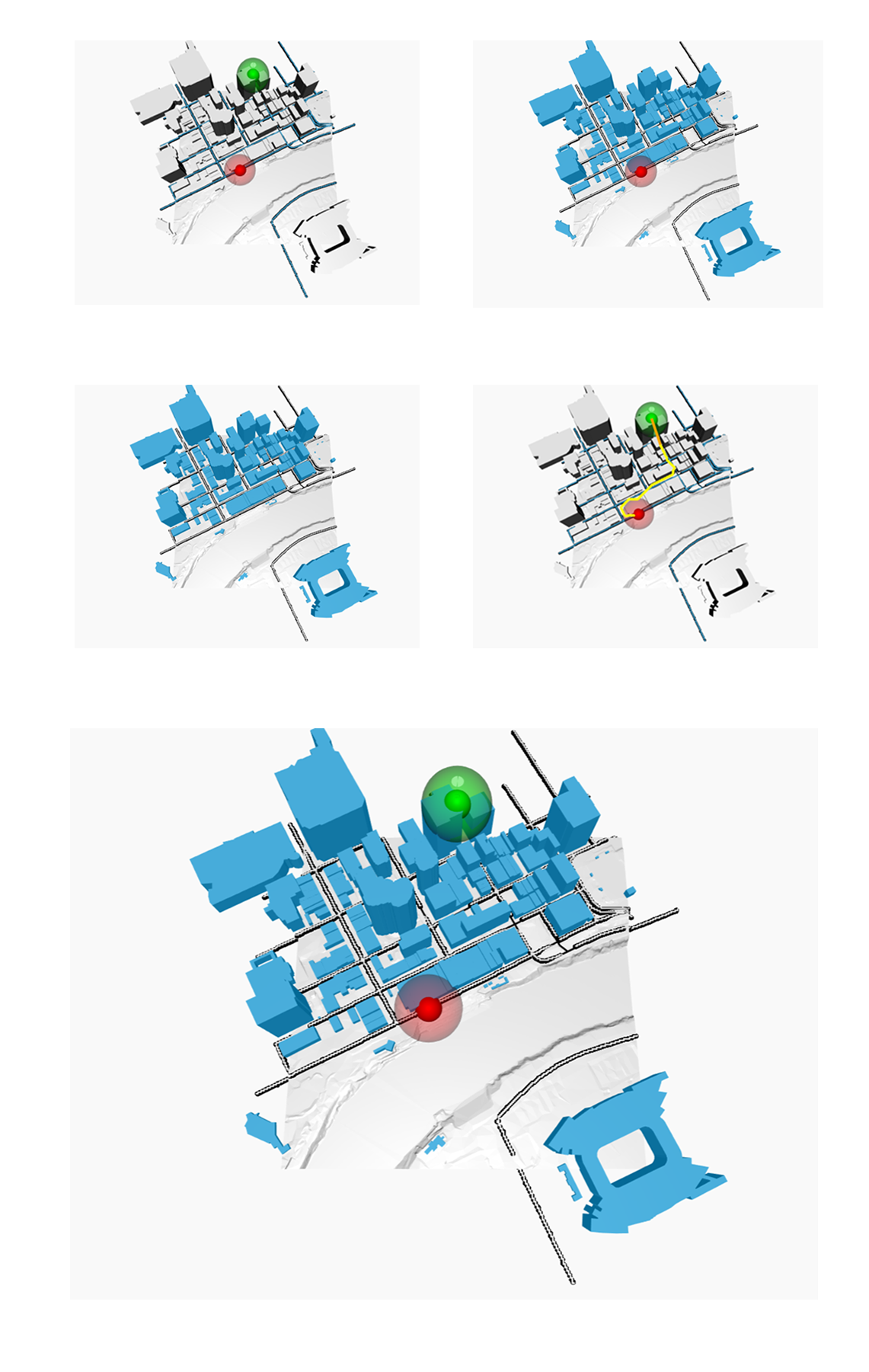

Progression using VASA: identify two points along the roads, match the candidate connections between them, and take the shortest-distance route.
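To make the routing idea concrete, here is a minimal sketch of shortest-path search over a grid with blocked cells. It uses plain Dijkstra in Python rather than VASA's own nodes, and the grid layout, the `shortest_path` name, and the cost values are all assumptions made for illustration.

```python
import heapq


def shortest_path(grid, start, goal):
    """Dijkstra over a 2D grid.

    grid[r][c] is the cost of entering a cell, or None when the cell is
    blocked (for example a collapsed road). Returns (path, total_cost),
    or (None, inf) when the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0.0}
    prev = {}
    queue = [(0.0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if d > dist.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] is not None:
                nd = d + grid[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))
    if goal not in dist:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path, dist[goal]


# Hypothetical site: the middle column is blocked below the top row.
site = [
    [1, 1, 1],
    [1, None, 1],
    [1, None, 1],
]
route, cost = shortest_path(site, start=(2, 0), goal=(2, 2))
```

Debris fields or steep terrain could be modeled by raising a cell's cost instead of blocking it outright, so the search still prefers clear roads but can cross rough ground when nothing else is available.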

The Technical Foundation: Agentic Tools and Integration

During the hackathon, we focused on proving the conceptual framework and identifying the technical requirements for implementation.

What We Built:

- Conceptual architecture for Model Context Protocol (MCP)-Dynamo integration

- Visual demonstrations of multiple disaster scenarios (flooding, hurricanes, earthquakes, biological threats)

- Identification of required agentic tool capabilities

- Mockups of user interfaces and workflow diagrams

- Proof-of-concept for data visualization and conflict detection logic

What We Validated:

- The workflow is technically feasible with existing tools (Forma, Dynamo, VASA, MCP)

- Multiple disaster types can use the same core architecture

- Emergency management professionals expressed strong interest

- The approach scales beyond just disaster response to other rapid-deployment scenarios

The Agentic Tools Framework

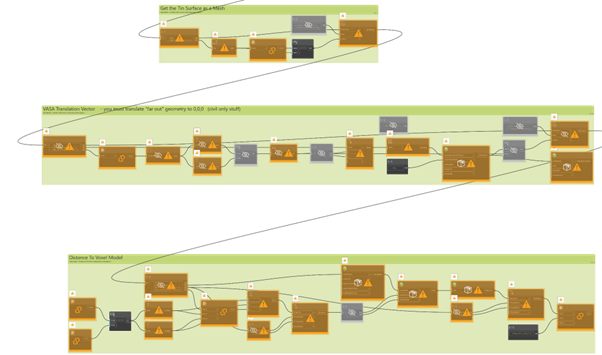

Our proposed system would leverage several cutting-edge Dynamo capabilities:

Custom Agentic Nodes:

- Toposolid extraction nodes that automatically slice terrain data at specified hazard levels (flood elevations, earthquake damage zones, etc.)

- Geometric intersection nodes that identify conflict points between infrastructure and hazard zones

- Path analysis nodes that call VASA algorithms for optimal routing

- Geometry allocation nodes that place shelter and facility geometry at identified safe locations

Real-World Data Integration:

The system would pull data from multiple sources:

- Live disaster feeds from government agencies

- Site topography and infrastructure from Forma

- Road networks and accessibility data

- Building footprints and facility locations

These components exist independently—our innovation is in connecting them through an intelligent MCP architecture that orchestrates the entire workflow.

The Architecture Decision: MCP Integration Strategy

One of the most critical insights from the hackathon was recognizing how Model Context Protocol (MCP) could revolutionize disaster response automation. Because we tried both features during the hackathon, we see two distinct architectural approaches for a production implementation:

Option 1: Dynamo Nodes Generated by MCP

In this approach, MCP servers would dynamically generate custom Dynamo nodes for each disaster routine:

MCP Server → Generates Custom Nodes → Dynamo Executes Locally

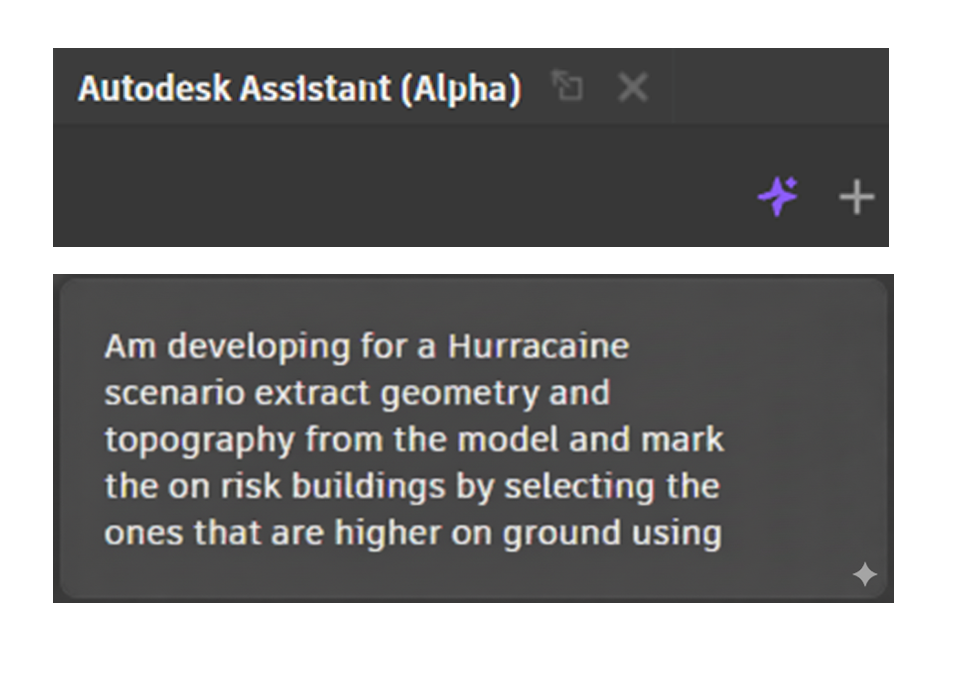

MCP (Model Context Protocol) requires both a server, which exposes the functions to run, and a client. One way to achieve this is through the Alpha version of the Dynamo Autodesk Assistant, by following a routine of elements to be triggered.

Autodesk Assistant can create nodes, Python scripts, or a mix of both. Autodesk Assistant is an MCP client running with the Dynamo MCP server, which enables Dynamo functions and leverages Autodesk AI.

Alternatively, you can use partially built functions through the Agent Node:

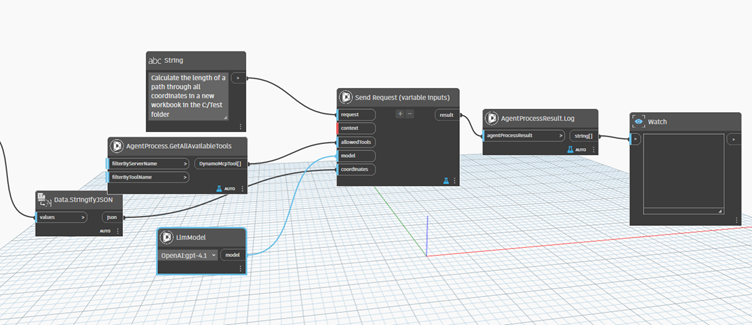

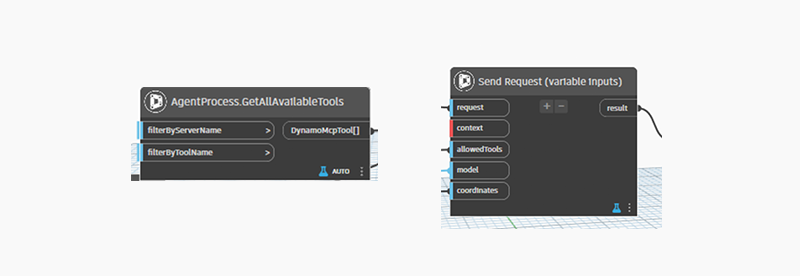

The main component for this workflow is the AgentProcess.GetAllAvailableTools node, which retrieves MCPs loaded in Dynamo, such as the Revit MCP, to execute tasks.

You can also retrieve additional data from other MCPs. We tested the Excel MCP to access data from a spreadsheet. You can use the Send Request (variable inputs) node to enable functionality from other applications and bring it into Dynamo. This node uses Autodesk AI models and allows you to supply context, request, and other inputs.

Advantages:

- Dynamo remains the primary execution environment

- Leverage existing Dynamo Player deployment infrastructure

- Engineers can visualize and debug the graph structure

- Works within established IT security perimeters

- No external API dependencies during execution

Challenges:

- Node generation adds complexity

- Updates require re-generating and re-deploying nodes

- Limited flexibility once nodes are compiled

Option 2: MCP Server Executing Routines Directly

Alternatively, the disaster response routines live within MCP servers, called by lightweight Dynamo wrapper nodes:

Dynamo Thin Client → MCP Server API → Executes Complete Routines → Returns Results

A variation on this is to create an MCP server that implements the functions using the Dynamo MCP, the Revit API, or the Dynamo DLL.

Much like using MCP servers with Claude, running them in the Alpha version of the Dynamo Agent lets workflows be used as tools by Autodesk AI LLM services.
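For readers new to MCP, the thin-client idea comes down to sending a small JSON-RPC 2.0 message: MCP clients invoke a server tool with the `tools/call` method. The sketch below only builds that message. The tool name `find_safe_zones` and its arguments are hypothetical, and the transport (stdio or HTTP) is left out.

```python
import json
from itertools import count

_request_ids = count(1)


def build_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, used only to show the message shape.
request = build_tool_call(
    "find_safe_zones",
    {"site_id": "demo-site", "flood_level_m": 152.5},
)
payload = json.dumps(request)
```

Because the message carries only a tool name and arguments, the wrapper node in Dynamo can stay small while the routine itself changes on the server.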

Advantages:

- Centralized routine management and updates

- Real-time algorithm improvements without client updates

- Easier integration with live disaster data feeds

- Scales across multiple Forma/Revit instances

Challenges:

- Requires reliable network connectivity

- API rate limits and latency considerations

- More complex authentication and security architecture

Our Recommended Hybrid Approach

After extensive discussion, we’re leaning toward a hybrid architecture that leverages the strengths of both:

- Core Analysis Engine: MCP servers host the intelligence—AI models, disaster databases, optimization algorithms, real-time data integrations

- Dynamo Execution Layer: Lightweight nodes in Dynamo handle:

- Geometry extraction from Revit/Forma

- Visualization and user interaction

- Local geometry operations

- Final model updates

- Smart Caching: Results cache locally so repeated analyses don’t require constant API calls

- Graceful Degradation: If MCP servers are unreachable, fall back to last-known-good algorithms running locally
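The caching and fallback behavior described above can be sketched in a few lines. This is an illustration under stated assumptions, not our implementation: `HybridAnalysisClient`, the five-minute cache lifetime, and the two callables passed in are all hypothetical names and values.

```python
import time


class HybridAnalysisClient:
    """Try the remote analysis first, reuse cached results, degrade locally."""

    def __init__(self, remote_call, local_fallback, ttl_seconds=300.0,
                 clock=time.monotonic):
        self._remote_call = remote_call        # e.g. a call to an MCP server
        self._local_fallback = local_fallback  # last-known-good local routine
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache = {}  # key -> (timestamp, result)

    def analyze(self, key, params):
        """Return (result, source) where source says where the result came from."""
        now = self._clock()
        cached = self._cache.get(key)
        if cached and now - cached[0] < self._ttl:
            return cached[1], "cache"
        try:
            result = self._remote_call(params)
        except (ConnectionError, TimeoutError):
            # Server unreachable: prefer an old answer, else compute locally.
            if cached:
                return cached[1], "stale-cache"
            return self._local_fallback(params), "local-fallback"
        self._cache[key] = (now, result)
        return result, "remote"
```

Returning the source alongside the result lets the interface tell a coordinator whether a recommendation is fresh, cached, or produced by the offline fallback.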

This architecture enables:

- Rapid iteration: Update algorithms on MCP servers without touching client deployments

- Offline capability: Core functions work even without connectivity

- Natural language control: Emergency coordinators can describe scenarios in plain English

- Multi-platform: The same MCP servers can serve Dynamo, Forma, custom web interfaces, or mobile apps

Why This Matters for Disaster Response

The MCP architecture isn’t just technically elegant—it’s operationally critical for emergency response:

Speed of Deployment: When a disaster strikes, there’s no time to update software. MCP servers can push updated algorithms instantly to all connected clients.

Learning from Each Event: Every disaster response generates data. MCP servers can incorporate lessons learned and improve recommendations in real time.

Coordination Across Jurisdictions: Multiple agencies using different tools can all connect to the same MCP infrastructure, ensuring consistent analysis and coordinated response.

Future-Proofing: As new AI models emerge or disaster science evolves, the core intelligence upgrades without touching the hundreds or thousands of deployed Dynamo graphs.

This architectural approach transforms Masters of Disasters from a single-purpose tool into a platform for continuous improvement in emergency response capabilities.

Demonstration Scenarios: Visualizing the Possible

During the hackathon, we developed a series of conceptual demonstrations showing how the system would respond to different disaster types. While we didn’t complete a fully functional end-to-end system in 6 hours, we proved the core concepts and visualized the workflow for multiple scenarios:

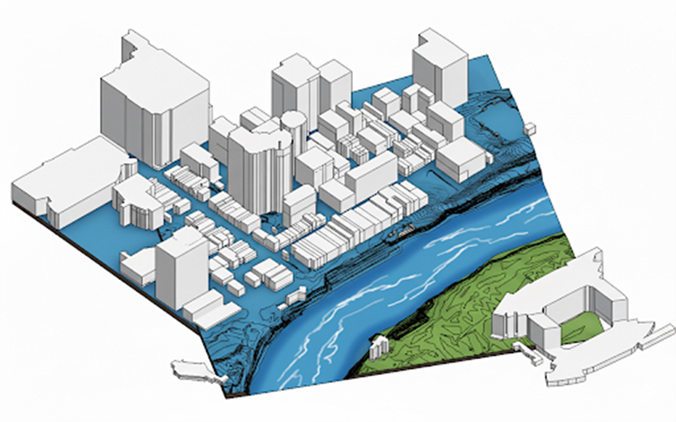

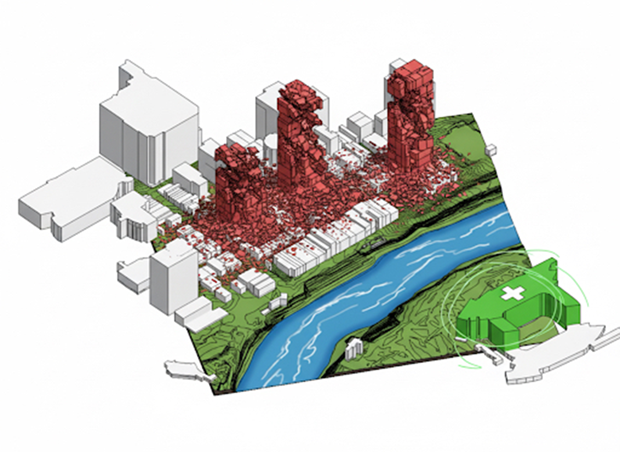

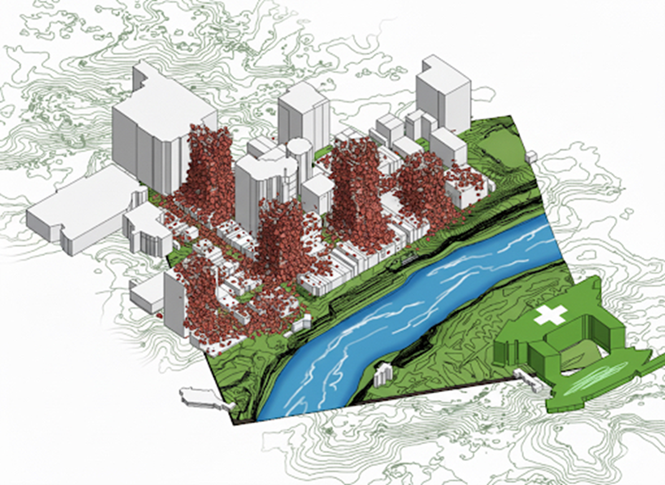

Flooding Scenario

When rising water threatens a community, the system would:

- Receive flood coordinates and predicted water levels

- Extract site data showing terrain elevation

- Identify conflict points where buildings intersect with flood zones (shown in blue)

- Calculate safe zones at higher elevations (shown in green)

- Deploy emergency shelters on high ground with optimal accessibility

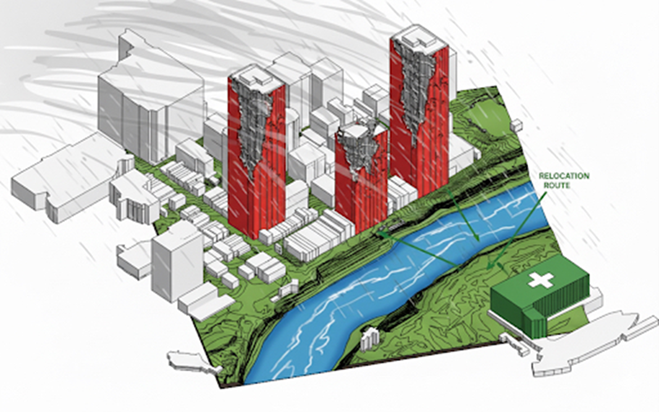
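The flooding steps can be illustrated on a small elevation grid. This is a simplified sketch, not the Dynamo graph itself: the grid values, the one-meter `freeboard` safety margin, and both function names are assumptions chosen for the example.

```python
def classify_cells(elevation, flood_level, freeboard=1.0):
    """Label each cell 'flooded', 'marginal', or 'safe'.

    Cells at or below flood_level are flooded; cells less than `freeboard`
    above it are marginal; everything higher is safe.
    """
    labels = []
    for row in elevation:
        labels.append([
            "flooded" if z <= flood_level
            else "marginal" if z < flood_level + freeboard
            else "safe"
            for z in row
        ])
    return labels


def nearest_safe_cell(labels, origin):
    """Return the safe cell closest to origin (Manhattan distance), or None."""
    best = None
    for r, row in enumerate(labels):
        for c, label in enumerate(row):
            if label == "safe":
                d = abs(r - origin[0]) + abs(c - origin[1])
                if best is None or d < best[0]:
                    best = (d, (r, c))
    return best[1] if best else None


# Hypothetical elevations in meters; predicted water level is 101.0 m.
elevation = [
    [100.0, 100.5, 102.0],
    [100.2, 101.2, 103.0],
    [100.8, 101.9, 104.0],
]
labels = classify_cells(elevation, flood_level=101.0)
shelter_cell = nearest_safe_cell(labels, origin=(0, 0))
```

In this example the nearest safe cell to the flooded corner at `(0, 0)` is `(0, 2)`, which is where a shelter would be proposed before accessibility is checked.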

Hurricane Scenario

High winds and storm surge require different shelter strategies:

- Identifies structurally compromised buildings (shown in red)

- Prioritizes wind-protected evacuation routes

- Places reinforced shelters away from coastal exposure

Earthquake Scenario

Structural damage assessment drives facility placement:

- Maps damaged infrastructure requiring evacuation (red zones)

- Identifies open spaces safe from falling debris

- Creates medical triage facility locations

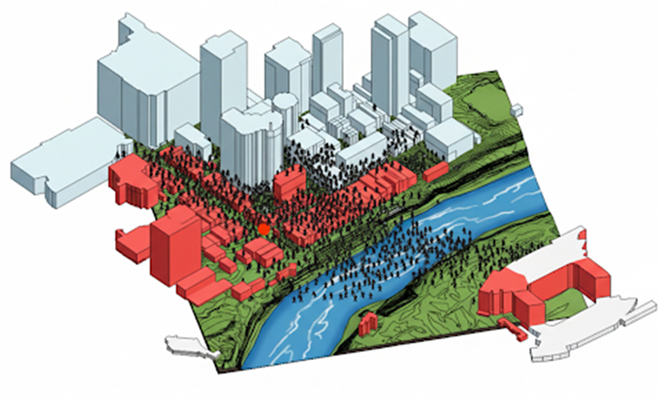

Biological Contamination

Pandemics or chemical spills require isolation protocols:

- Visualizes contamination spread patterns

- Creates quarantine zones and containment perimeters

- Places isolation facilities with controlled access points

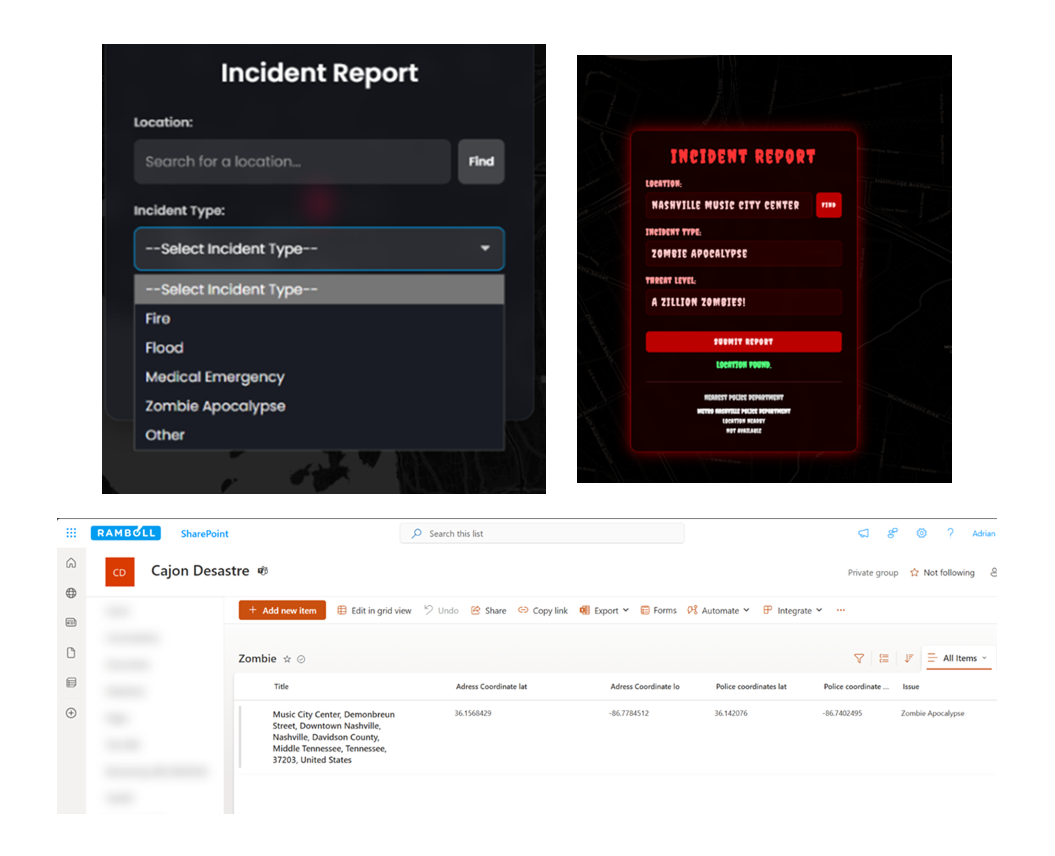

And Yes… Zombie Apocalypse

Because every good hackathon needs a creative scenario:

- Identifies safe zones away from population centers

- Creates defensible shelter clusters

- Optimizes supply distribution without exposure risk

Each scenario uses the same core agentic tools:

- Toposolid extraction against hazard levels

- Geometric intersection to detect conflicts

- Path analysis for optimal routing

- Geometry allocation for shelter placement

The variety of scenarios proved our approach was adaptable—the same fundamental workflow could address vastly different disaster types by adjusting the hazard parameters and placement constraints.
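One way to picture "same workflow, different parameters" is a small table of hazard profiles passed to a single placement check. The profile fields and every threshold below are placeholder values for illustration only, not engineering guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardProfile:
    """Placement constraints for one disaster type (hypothetical fields)."""
    name: str
    min_elevation_above_hazard_m: float
    min_distance_from_hazard_m: float


# Placeholder thresholds; real values would come from domain experts.
PROFILES = {
    "flood": HazardProfile("flood", 2.0, 50.0),
    "earthquake": HazardProfile("earthquake", 0.0, 30.0),
    "contamination": HazardProfile("contamination", 0.0, 500.0),
}


def site_is_acceptable(profile, elevation_above_hazard_m, distance_from_hazard_m):
    """Apply one profile's constraints to a candidate shelter site."""
    return (
        elevation_above_hazard_m >= profile.min_elevation_above_hazard_m
        and distance_from_hazard_m >= profile.min_distance_from_hazard_m
    )


# The same candidate site evaluated against each scenario.
results = {
    name: site_is_acceptable(profile, 1.0, 100.0)
    for name, profile in PROFILES.items()
}
```

Adding a new disaster type then means adding a profile, not writing a new workflow.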

What We Achieved: Proof of Vision

In just 6 intense hours, we didn’t build a finished, deployable system—but we proved something arguably more important: that this vision is achievable and worth pursuing. The compressed timeframe forced us to focus on what truly mattered: validating the concept, identifying the architecture, and demonstrating the potential. Our proof of concept demonstrated:

Conceptual Validation: We mapped the complete workflow from disaster detection through facility deployment, identifying every data integration point, analysis step, and automation opportunity. In 6 hours, we did the strategic thinking that typically takes weeks.

Visual Demonstration: Through multiple disaster scenarios, we showed how the same core logic adapts to different emergency types—flooding, hurricanes, earthquakes, and more. Each scenario proved the adaptability of our approach.

Agentic Tool Architecture: We defined the specific agentic capabilities needed:

- Toposolid extraction routines

- Geometric conflict detection

- Path analysis integration with VASA

- Automated geometry placement algorithms

Stakeholder Enthusiasm: The response from judges, emergency management professionals, and fellow developers validated the real-world need and commercial viability.

Clear Implementation Path: Most importantly, we identified exactly what needs to be built and how to architect it for production deployment.

For organizations like FEMA and disaster relief agencies, our hackathon presentation demonstrated a compelling future: when disasters strike, AI-powered systems could have deployment plans ready in minutes instead of hours. The technology exists—now it’s about integration and implementation.

The Development Journey: Challenges and Breakthroughs

Like any hackathon project, Masters of Disasters came with significant technical challenges. Working with Forma’s site data API required careful data parsing and transformation. Getting VASA pathfinding to work with real topographic meshes—not just simplified test geometries—required multiple iterations and creative problem-solving.

One of our biggest breakthroughs came when we successfully integrated the conflict detection system. Initially, we struggled to reliably identify which facilities would be impacted by flooding. The solution came from using geometric solid intersections between the toposolid at the flood level and the building geometry. Once we had reliable conflict detection, the automatic relocation logic fell into place.
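The intersection test at the heart of that conflict detection can be sketched with axis-aligned boxes standing in for solids. This is a deliberate simplification of the solid intersection we used in Dynamo, and the `Box` type, function names, and coordinates are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box standing in for a solid (hypothetical helper)."""
    min_x: float
    min_y: float
    min_z: float
    max_x: float
    max_y: float
    max_z: float


def boxes_intersect(a: Box, b: Box) -> bool:
    """True when the two boxes overlap with non-zero volume."""
    return (
        a.min_x < b.max_x and b.min_x < a.max_x
        and a.min_y < b.max_y and b.min_y < a.max_y
        and a.min_z < b.max_z and b.min_z < a.max_z
    )


def find_conflicts(buildings: dict[str, Box], flood_volume: Box) -> list[str]:
    """Names of buildings whose volume overlaps the flood volume."""
    return [name for name, box in buildings.items()
            if boxes_intersect(box, flood_volume)]


# Hypothetical site: water fills the area up to z = 101 m.
flood_volume = Box(0, 0, 95, 100, 100, 101)
buildings = {
    "clinic": Box(10, 10, 100, 20, 20, 108),  # base below the water level
    "school": Box(60, 60, 103, 80, 80, 112),  # base above the water level
}
conflicts = find_conflicts(buildings, flood_volume)
```

Real terrain is not a box, so a production version would intersect the actual flood solid with each building solid, but the decision it feeds (relocate or keep) is the same.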

The AI-powered site suitability analysis also evolved significantly during development. Early iterations produced recommendations that looked good on paper but didn’t account for real-world constraints like soil conditions or existing utility infrastructure. We refined our prompts and added additional data layers to ensure the AI’s recommendations were genuinely deployable.
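A transparent baseline is useful for sanity-checking AI recommendations like these. The sketch below is a plain weighted score over the factors listed earlier, not the prompt-driven analysis we used. The weights and the normalized 0 to 1 factor values are assumptions.

```python
# Assumed weights for illustration; they sum to 1.0.
WEIGHTS = {
    "flood_safety": 0.30,
    "population_proximity": 0.25,
    "accessibility": 0.20,
    "ground_conditions": 0.15,
    "hazard_distance": 0.10,
}


def suitability_score(factors, weights=WEIGHTS):
    """Weighted sum of factor values, each normalized to the range 0..1."""
    missing = set(weights) - set(factors)
    if missing:
        raise ValueError(f"missing factors: {sorted(missing)}")
    for name in weights:
        if not 0.0 <= factors[name] <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return sum(weights[name] * factors[name] for name in weights)


def rank_sites(candidates):
    """Candidate names ordered from most to least suitable."""
    return sorted(candidates,
                  key=lambda name: suitability_score(candidates[name]),
                  reverse=True)


# Hypothetical candidates: a ridge far from people, a valley close to them.
candidates = {
    "ridge": {"flood_safety": 1.0, "population_proximity": 0.4,
              "accessibility": 1.0, "ground_conditions": 1.0,
              "hazard_distance": 1.0},
    "valley": {"flood_safety": 0.1, "population_proximity": 0.9,
               "accessibility": 0.9, "ground_conditions": 0.9,
               "hazard_distance": 0.9},
}
ranking = rank_sites(candidates)
```

When the AI's ranking disagrees sharply with a baseline like this, that is a cue to inspect which data layer, such as soil conditions or utilities, is driving the difference.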

Future Steps to Follow

While our hackathon prototype focused on general scenarios, the potential applications are far broader. Future iterations of Masters of Disasters will:

Expand Disaster Type Coverage

- Earthquake response with structural damage assessment

- Wildfire evacuation planning with smoke and wind modeling

- Hurricane preparedness with storm surge prediction

- Pandemic response with facility isolation requirements

Enhance AI Capabilities

- Predictive modeling to pre-position resources before disasters strike

- Machine learning from past disaster responses to improve recommendations

- Natural language interfaces for emergency coordinators

- Real-time updates as disaster conditions evolve

Integrate with Emergency Systems

- Direct API connections to FEMA response systems

- Mobile apps for field coordinators

- GIS integration for mapping and visualization

- IoT sensors for real-time disaster monitoring

Deploy Automated Resource Allocation

- Supply chain optimization for emergency materials

- Personnel deployment and shift scheduling

- Equipment tracking and utilization

- Cost estimation and budget management

Leverage Dynamo as a Service (DaaS) Integration

- Deploy the system on Autodesk Forma through DaaS, allowing emergency management agencies to access disaster response planning tools without specialized software installations.

Key Takeaways and Lessons Learned

This hackathon reinforced several critical lessons about building impactful technology:

- Focus on Real Impact: We didn’t chase flashy features. Every component of our system exists to save lives. That clarity of purpose kept us focused when decisions got tough.

- Leverage Existing Infrastructure: Rather than building everything from scratch, we integrated with proven platforms like Forma and VASA. This let us focus on the novel aspects of disaster response automation.

- Start Simple, Iterate Fast: Our first prototype was rough. But by getting something working quickly, we could test, learn, and improve.

- Diverse Teams Win: Our team’s varied backgrounds—architecture, engineering, systems design, program management—meant we approached problems from multiple angles. This diversity was our strength.

- The Future is Agentic: Dynamo’s agentic tools opened possibilities we couldn’t have achieved with traditional scripting. AI isn’t just about answering questions—it’s about autonomous systems that can analyze, decide, and act.

- Technology Can Save Lives: This wasn’t an academic exercise. The tools we built during this hackathon could genuinely help communities prepare for and respond to disasters. That responsibility motivated us every step of the way.

The Bigger Picture: AI in Emergency Response

Masters of Disasters represents something larger than a single hackathon project. It’s a glimpse into how AI and automation can transform emergency response across the board. As climate change increases the frequency and severity of natural disasters, we need tools that can scale to match the challenge.

The AEC industry has spent decades developing sophisticated tools for designing buildings and infrastructure. Now, we’re learning to apply those same capabilities to emergency response. The marriage of BIM, AI, and real-time data creates possibilities that didn’t exist even a few years ago.

For firms, agencies, and organizations involved in disaster response, the message is clear: automation isn’t coming someday—it’s here now. The question isn’t whether AI will transform emergency management, but how quickly we can deploy these capabilities to the people and places that need them most.

Conclusion: A Vision Worth Building

Hackathons are about possibilities. In just 6 hours—a single intense work session—we didn’t build a complete disaster response system, but we proved it’s possible, visualized how it would work, and identified the architecture to make it real.

What sets Masters of Disasters apart isn’t just the application to emergency response. It’s the recognition that Model Context Protocol represents a fundamental shift in how we build intelligent AEC applications. By separating the intelligence layer (MCP servers) from the execution layer (Dynamo, Forma, mobile apps), we create systems that can:

- Learn continuously from every disaster

- Update instantly without software deployments

- Coordinate seamlessly across jurisdictions and platforms

- Scale as demand requires

The challenge ahead is execution. We need partnerships with emergency management agencies, integration with government data systems, and validation from disaster response professionals. We need to build the MCP infrastructure, develop the algorithms, and prove the system works under real disaster conditions.

But we’re confident because the need is undeniable and the technology exists. Climate change is increasing disaster frequency and severity. Traditional manual planning can’t scale to meet the challenge. AI-powered automation isn’t a luxury—it’s becoming essential.

To the Dynamo and AEC community: This is what hackathons are for. Not just to build features, but to imagine new possibilities. Masters of Disasters started as a 6-hour sprint. With the right support and partnerships, it could save lives.

The next disaster is coming. Let’s make sure we’re ready.

Note from the Dynamo team: Would you like to try out MCP capabilities in Dynamo? Sign up for the Alpha today! More details in this Dynamo forum post.

Meet the Hackathon Team

Our diverse team brought together expertise from across the AEC and infrastructure industries.

The Masters of Disasters team thanks Autodesk, the Dynamo community, and the hackathon organizers for creating the space to imagine bold solutions. We acknowledge the emergency responders worldwide whose dedication inspired this vision, and we’re committed to turning this concept into reality.